W. Wang1, M. Caselle1, T. Boltz1, E. Blomley1, M. Brosi1, T. Dritschler1, A. Ebersoldt1, A. Kopmann1, A. Santamaria Garcia1, P. Schreiber1, E. Bründermann1, M. Weber1, A.-S. Müller1, Y. Fang2

1Karlsruhe Institute of Technology (KIT), 2Northwestern Polytechnical University

IEEE Transactions on Nuclear Science

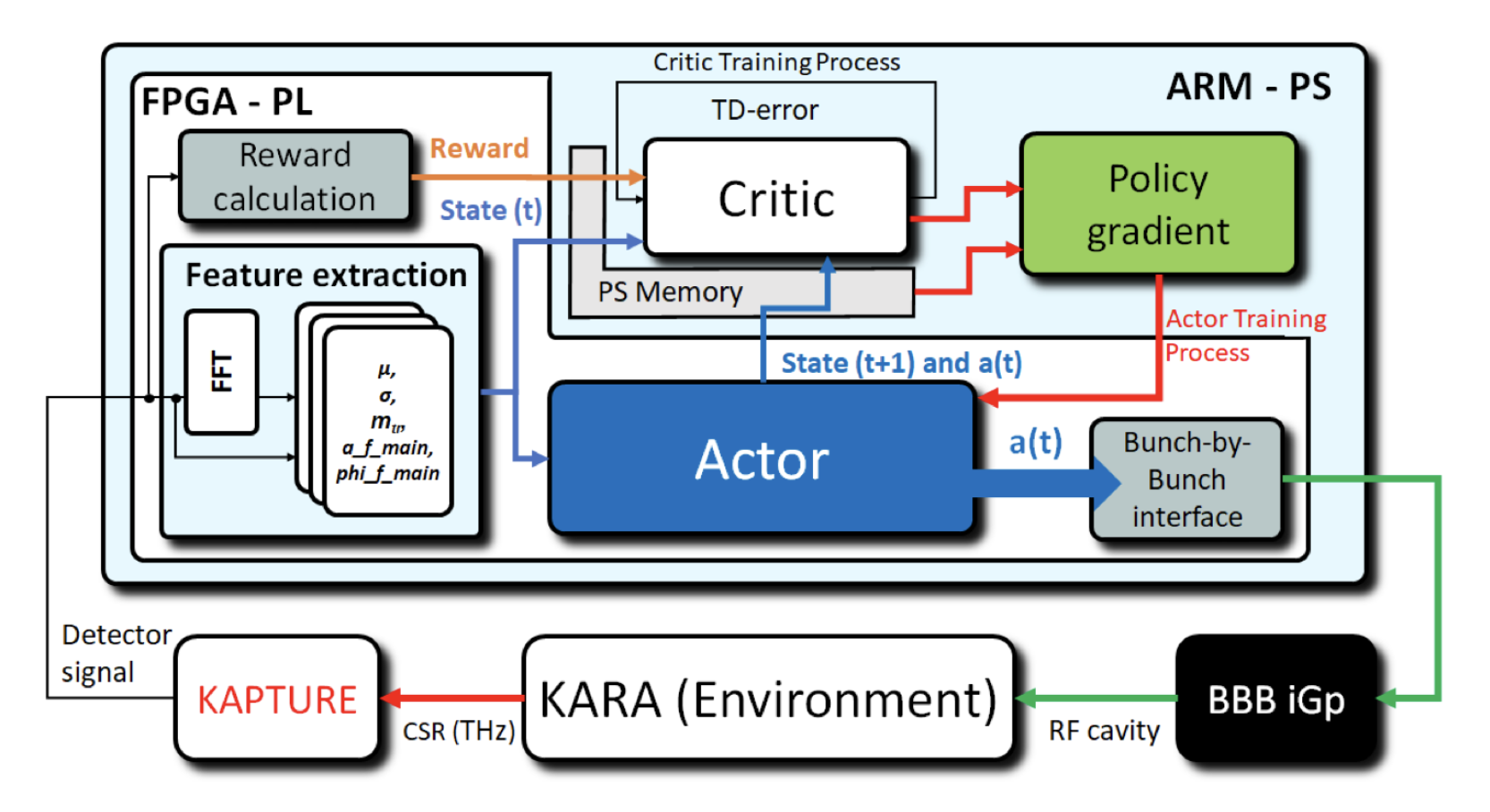

Abstract: Coherent synchrotron radiation (CSR) is generated when the electron bunch length is on the order of the wavelength of the emitted radiation. The self-interaction of short electron bunches with their own electromagnetic fields changes the longitudinal beam dynamics significantly. Above a certain current threshold, the micro-bunching instability develops, characterized by the appearance of distinguishable substructures in the longitudinal phase space of the bunch. To stabilize the CSR emission, a real-time feedback control loop based on reinforcement learning (RL) is proposed. Informed by the available THz diagnostics, the feedback is designed to act on the radio frequency (RF) system of the storage ring to mitigate the micro-bunching dynamics. To satisfy the low-latency requirements imposed by the longitudinal beam dynamics, the RL controller has been implemented in hardware on a field-programmable gate array (FPGA). In this article, a real-time feedback loop architecture and its performance are presented and compared with a software implementation using Keras-RL on CPU/GPU. The results obtained with the CSR simulation code Inovesa demonstrate that the functionality of both platforms is equivalent. The training performance of the hardware implementation is similar to that of the software solution, while the hardware implementation outperforms the Keras-RL implementation by an order of magnitude. The presented RL hardware controller is considered an essential platform for the development of intelligent CSR control systems.

...